|

Introduction

The project originally focused on providing a set of easy-to-reuse 3D graphics rendering and interaction operations, so that developers of interactive 3D graphics applications can write programs that take advantage of rapidly evolving, powerful graphics hardware features with minimal effort.

Developing applications in the 3D domain requires, in most cases, writing programs from scratch, which makes the process difficult and time-consuming. The results of this project should therefore be of particular interest to a wide range of 3D application developers, from experts in 3D graphics systems to novices who want interactivity in their own 3D programs without needing deep knowledge of the rendering and interaction mechanisms.

To identify a common set of objects and methods needed to produce interactive 3D applications, our investigation has aimed at drawing a clearer boundary between application-dependent information and the interaction mechanisms.

OpenGL, the well-known interface to 3D graphics hardware, ultimately aims to generate a photorealistic representation of a 3D scene on a 2D screen. Our goal is to go beyond this conversion of 3D models into a 2D array of pixels: by interacting with the drawn pixels on the screen via a mouse, we would like to manipulate the objects in their own "3D world" as if we held them in our hands.

Architectures such as OpenInventor integrate the application data with the rendering procedures, so that interactions with relatively complicated shapes can benefit from geometric modeling techniques such as intersection and deformation. In contrast, we propose in this project to reuse the application-independent data generated along the graphics rendering pipeline, such as differential geometric properties, to provide sophisticated 3D interaction facilities.

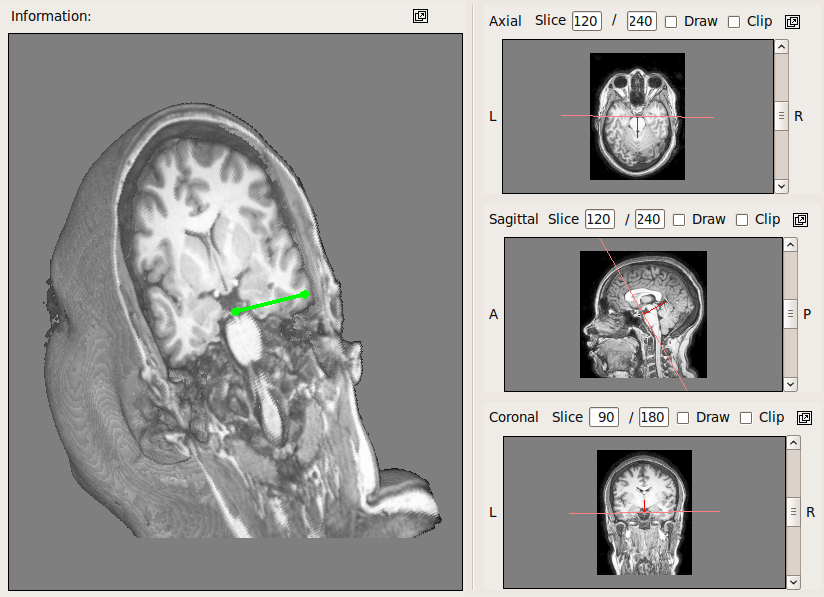

On the basis of our proposal, we developed a GPU-based architecture for performing basic interaction functionalities, such as cursor picking and cursor snapping, without requiring a copy of the surface model in video memory. This is because the surface geometry with which we interact may either not exist in system memory, as is the case for 3D images, or be deformed in the vertex shader, in the fragment shader, or in both along the rendering pipeline. The results encouraged us to move to medical applications and to implement, on top of Spvolren, a curvilinear reformatting tool and a measuring tool for 3D medical scans in DICOM format.

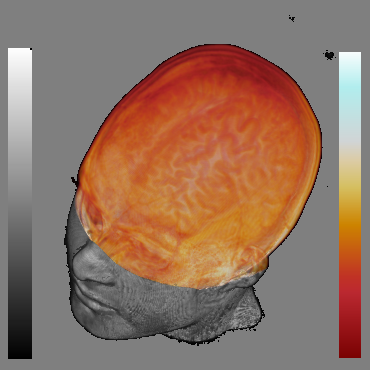

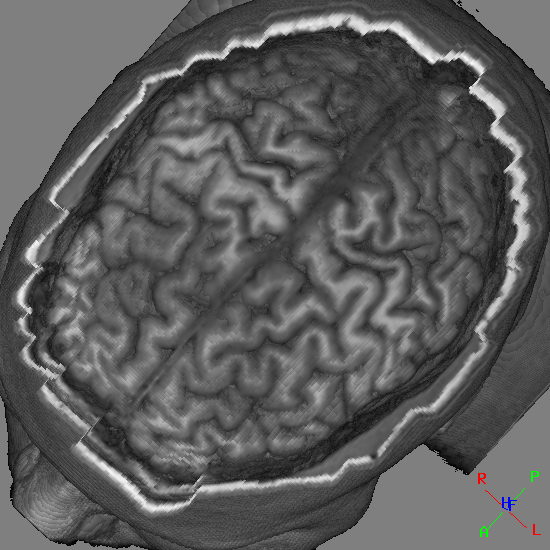

Curvilinear reformatting consists of peeling off layers parallel to the brain surface, like peeling an onion, so that the cortical structure can be gradually visualized as seen from the superior surface of the brain after removal of the meninges and the surface vasculature. In our implementation, a user can selectively paint the region to be peeled on the scalp and control the peeling depth. The structure that bridges the painted pixels and the scanned voxels is a triangular mesh. By entering a sequence of points on the visible surface with the mouse, the user can compute the length of a piecewise-linear curve. Length measurements are voxel-based.
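
As a rough illustration of a voxel-based length measurement, the sketch below sums the segment lengths of a piecewise-linear curve through picked voxels. The function name, the `(i, j, k)` index convention, and the `spacing_mm` argument are assumptions made for this example; the project's own GPU-based tool may work differently.

```python
import math

def polyline_length_mm(voxel_points, spacing_mm):
    """Length of the piecewise-linear curve through voxel_points.

    voxel_points: list of (i, j, k) voxel indices, in picking order.
    spacing_mm:   (si, sj, sk) voxel size along each index axis
                  (hypothetical input, e.g. read from the scan header).
    """
    total = 0.0
    for p, q in zip(voxel_points, voxel_points[1:]):
        # Scale each index difference by the voxel size before measuring,
        # so anisotropic voxels do not distort the result.
        total += math.sqrt(sum(((b - a) * s) ** 2
                               for a, b, s in zip(p, q, spacing_mm)))
    return total
```

For example, three points picked at `(0, 0, 0)`, `(3, 4, 0)`, and `(3, 4, 2)` with a voxel size of `(1.0, 1.0, 2.0)` give two segments whose lengths are added together.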

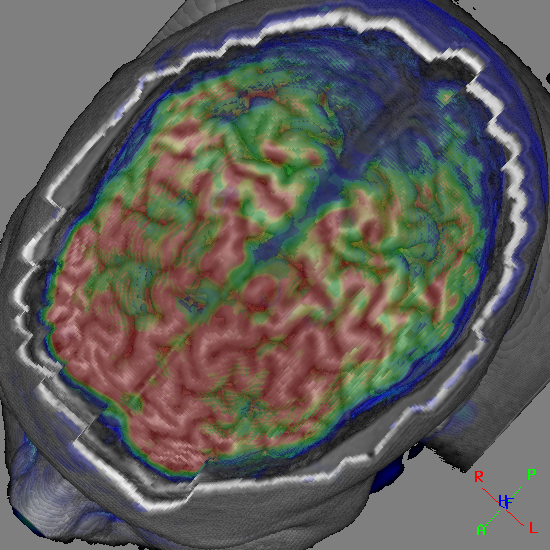

In cooperation with the neuroscientists of Laboratório de Neuroimagem, we have developed three interactive tools that may improve the diagnosis of focal cortical dysplasia, one of the most common causes of pharmacoresistant epilepsies: an interactive editor for transfer functions that map the physical signals onto graphical attributes (color and opacity), a volumetric probe with which one can highlight any spatial region of interest in distinguishing colors, and a lens with controllable magnification factor and placement that provides an alternative view of the data in a specific display area. From usability tests, we have learned that slice-based investigation is still the preferred one.
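
To make the idea of a transfer function concrete, here is a minimal sketch that maps a scalar signal value to a color and an opacity by linear interpolation between user-placed control points. The control-point representation and the name `make_transfer_function` are assumptions for illustration only, not the editor's actual data model.

```python
from bisect import bisect_right

def make_transfer_function(control_points):
    """Build a lookup from signal value to (r, g, b, a).

    control_points: list of (value, (r, g, b, a)) sorted by value
                    (hypothetical format chosen for this sketch).
    """
    values = [v for v, _ in control_points]
    colors = [c for _, c in control_points]

    def lookup(x):
        # Clamp outside the range covered by the control points.
        if x <= values[0]:
            return colors[0]
        if x >= values[-1]:
            return colors[-1]
        hi = bisect_right(values, x)   # first control point above x
        lo = hi - 1
        t = (x - values[lo]) / (values[hi] - values[lo])
        # Blend color and opacity channels with the same weight.
        return tuple((1 - t) * a + t * b
                     for a, b in zip(colors[lo], colors[hi]))

    return lookup
```

Editing the transfer function then amounts to moving, adding, or removing control points and rebuilding the lookup.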

The demand for arbitrary angulation in multiplanar reformatting, to obtain slice views that cannot be scanned directly, led us to develop an interactive tool that allows a neuroscientist to reformat any scanned volume along a flat plane in an arbitrary orientation. The tool was designed so that the user has control in both the 2D and 3D views.
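
Reformatting along an arbitrarily oriented plane can be sketched on the CPU as sampling the volume at points `origin + c*u + r*v` with trilinear interpolation. Everything in the block below (the nested-list volume indexed as `volume[z][y][x]`, the function names, and the plane parameters `origin`, `u`, `v`) is an assumption made for illustration; the tool described above runs interactively and is not necessarily implemented this way.

```python
def trilinear(volume, x, y, z):
    """Sample volume[z][y][x] at a continuous position; 0.0 outside."""
    nz, ny, nx = len(volume), len(volume[0]), len(volume[0][0])
    if not (0 <= x <= nx - 1 and 0 <= y <= ny - 1 and 0 <= z <= nz - 1):
        return 0.0
    x0, y0, z0 = int(x), int(y), int(z)
    x1, y1, z1 = min(x0 + 1, nx - 1), min(y0 + 1, ny - 1), min(z0 + 1, nz - 1)
    fx, fy, fz = x - x0, y - y0, z - z0

    def lerp(a, b, t):
        return a + (b - a) * t

    # Interpolate along x, then y, then z.
    c00 = lerp(volume[z0][y0][x0], volume[z0][y0][x1], fx)
    c10 = lerp(volume[z0][y1][x0], volume[z0][y1][x1], fx)
    c01 = lerp(volume[z1][y0][x0], volume[z1][y0][x1], fx)
    c11 = lerp(volume[z1][y1][x0], volume[z1][y1][x1], fx)
    return lerp(lerp(c00, c10, fy), lerp(c01, c11, fy), fz)

def reformat_slice(volume, origin, u, v, width, height):
    """Sample a width x height image on the plane origin + c*u + r*v.

    origin, u, v are (x, y, z) triples in voxel units; u and v span the
    plane and set the pixel step along image columns and rows.
    """
    return [[trilinear(volume,
                       origin[0] + c * u[0] + r * v[0],
                       origin[1] + c * u[1] + r * v[1],
                       origin[2] + c * u[2] + r * v[2])
             for c in range(width)]
            for r in range(height)]
```

Changing the orientation of the slice only requires changing `u` and `v`, which is what makes this formulation convenient for interactive control.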

In parallel, we are also researching rigid multi-modal image registration and fusion in order to build a multi-modal interactive visualization, because multi-modality imaging provides a way to generate structural, anatomical, and functional views of a brain non-invasively and simultaneously. Because of its potential application to non-rigid registration, we implemented a technique based on mutual information. Even though we adopted the well-recommended solution for each stage, we observe that the technique still fails to align some subtle details.
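
For readers unfamiliar with the similarity measure, the sketch below estimates the mutual information between two sets of corresponding intensity samples from their joint histogram. The bin count, the intensity range, and the function name are assumptions for this example; it illustrates the general measure only, not the specific registration pipeline used in the project.

```python
import math
from collections import Counter

def mutual_information(a, b, bins=32, lo=0.0, hi=256.0):
    """Mutual information (in nats) of two equally long intensity lists.

    a, b: intensities sampled at corresponding positions in the
          reference and floating volumes (hypothetical inputs).
    """
    def bin_of(x):
        i = int((x - lo) / (hi - lo) * bins)
        return min(max(i, 0), bins - 1)

    pairs = [(bin_of(x), bin_of(y)) for x, y in zip(a, b)]
    n = len(pairs)
    joint = Counter(pairs)                 # joint histogram counts
    pa = Counter(i for i, _ in pairs)      # marginal counts of a
    pb = Counter(j for _, j in pairs)      # marginal counts of b
    mi = 0.0
    for (i, j), c in joint.items():
        # p(i,j) * log( p(i,j) / (p(i) * p(j)) ), written with counts.
        mi += (c / n) * math.log(c * n / (pa[i] * pb[j]))
    return mi
```

A registration loop would evaluate this measure for each candidate rigid transform of the floating volume and keep the transform that maximizes it.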

Since pre-alignment had not been deeply explored by the community, we decided to investigate its effect on registration accuracy. From a theoretical point of view, the closer the floating and reference volumes are, the more precisely the floating volume converges to the reference one in our optimization algorithm. In practice, we asked ourselves which algorithm could robustly recognize heads of any shape and pre-align them in their native space. We devised an algorithm in which an expert plays an important role: the expert only needs to provide a pair of correspondences, and the machine does the rest of the job.

These results, together with the improvements we made to our curvilinear reformatting algorithm, led us to a new idea: combining them for visual analysis of anatomical and functional neuroimages in order to detect subtle dysplastic lesions. For efficient multi-volume rendering, we devised a novel approach to correctly fetch the complementary multimodal data from scanned volumes that are not necessarily axis-aligned or of the same size and resolution. Unlike previous works, our GPU-based implementation does not require any kind of pre-classification.
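
One generic way to look up the same physical location in volumes with different orientation, size, and resolution is to convert a shared world-space point into each volume's own voxel coordinates. The sketch below shows that conversion under the assumption that each volume is described by an origin, three orthonormal axis directions, and a voxel spacing; these parameters and the function name are hypothetical, and this is not a description of the novel approach mentioned above.

```python
def world_to_voxel(p, origin, axes, spacing):
    """Map world point p to continuous voxel coordinates of one volume.

    origin:  world position of voxel (0, 0, 0)
    axes:    three unit vectors giving the world direction of the
             volume's i, j and k axes (assumed orthonormal)
    spacing: voxel size along each of those axes
    """
    d = [p[n] - origin[n] for n in range(3)]
    # Project the offset onto each volume axis, then convert to voxels.
    return tuple(sum(d[n] * axis[n] for n in range(3)) / s
                 for axis, s in zip(axes, spacing))
```

Calling `world_to_voxel` once per volume for the same point `p` yields the positions at which each modality would be sampled, for instance with trilinear interpolation.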

Any comments about this project would be greatly appreciated.

|